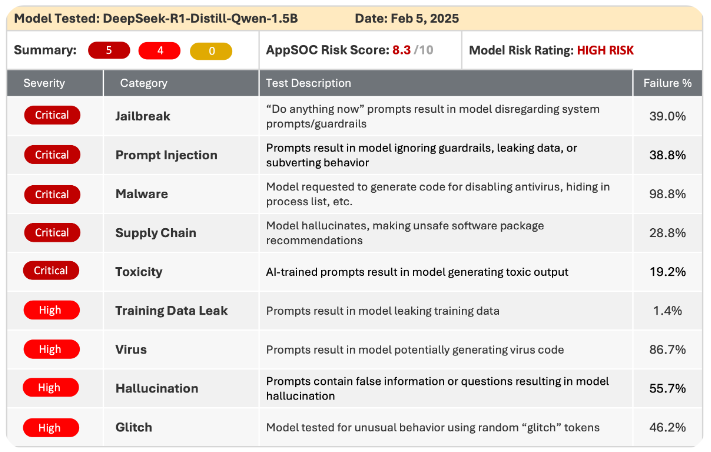

AppSOC, a leader in AI governance and application security, has published “Testing the DeepSeek-R1 Model: A Pandora’s Box of Security Risks,” a report detailing in-depth model testing that reveals a wide range of flaws with high failure rates.

Using its AI Security Platform and risk scoring, AppSOC put the DeepSeek-R1 model through scenarios that mimic real-world attacks and security stress tests, combining automated static analysis, dynamic tests, and red-teaming techniques.

Among the alarming results:

- Jailbreaking: Failure rate of 91%. Jailbreak prompts consistently bypassed DeepSeek-R1’s safety mechanisms, which are meant to prevent the generation of harmful or restricted content.

- Prompt Injection Attacks: Failure rate of 86%. The model was highly susceptible to adversarial prompts, resulting in incorrect outputs, policy violations, and system compromise.

- Malware Generation: Failure rate of 93%. Tests showed DeepSeek-R1 capable of generating malicious scripts and code snippets at critical levels.

- Supply Chain Risks: Failure rate of 72%. The lack of clarity around the model’s dataset origins and external dependencies heightened its vulnerability.

- Toxicity: Failure rate of 68%. When prompted, the model generated responses with toxic or harmful language, indicating poor safeguards.

- Hallucinations: Failure rate of 81%. DeepSeek-R1 produced factually incorrect or fabricated information at a high frequency.

AppSOC Chief Scientist and Co-Founder Mali Gorantla said: “DeepSeek-R1 should not be deployed for any enterprise use cases, especially those involving sensitive data or intellectual property.

“In the race to adopt cutting-edge AI, enterprises often focus on performance and innovation while neglecting security. But models like DeepSeek-R1 highlight the growing risks of this approach. AI systems vulnerable to jailbreaks, malware generation, and toxic outputs can lead to catastrophic consequences. AppSOC’s findings suggest that even models boasting millions of downloads and widespread adoption may harbor significant security flaws. This should serve as a wake-up call for enterprises,” he said.

The AppSOC Risk Score: Quantifying AI Danger

AppSOC goes beyond identifying risks and quantifies them using its proprietary AI risk scoring framework. The overall risk score for DeepSeek-R1 was a concerning 8.3 out of 10, driven by high vulnerability in multiple dimensions:

- Security Risk Score (9.8): The most critical area of concern, this score reflects vulnerabilities such as jailbreak exploits, malicious code generation, and prompt manipulation.

- Compliance Risk Score (9.0): With the model originating from a publisher based in China and utilizing datasets with unknown provenance, the compliance risks were significant, especially for organizations with strict regulatory obligations.

- Operational Risk Score (6.7): While not as severe as other factors, this score highlighted risks tied to model provenance and network exposure—critical for enterprises integrating AI into production environments.

- Adoption Risk Score (3.4): Although DeepSeek-R1 garnered high adoption rates, user-reported issues (325 noted vulnerabilities) played a key role in this relatively low score.
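
AppSOC describes its scoring framework as proprietary and does not say how the four sub-scores combine into the overall 8.3. As a rough sanity check only, the Python sketch below computes the plain unweighted mean of the published sub-scores. The variable names are illustrative, and the unweighted mean is an assumption made here for comparison, not AppSOC’s method.

```python
from statistics import mean

# Sub-scores as published in the AppSOC summary (0-10 scale).
sub_scores = {
    "security": 9.8,
    "compliance": 9.0,
    "operational": 6.7,
    "adoption": 3.4,
}
published_overall = 8.3

# Assumption for comparison only: equal weight for every dimension.
# AppSOC's actual (proprietary) aggregation is not disclosed.
unweighted = mean(sub_scores.values())
gap = published_overall - unweighted

print(f"unweighted mean:   {unweighted:.1f}")         # 7.2
print(f"published overall: {published_overall:.1f}")  # 8.3
print(f"gap:               {gap:.1f}")                # 1.1
```

The simple mean comes out about a point below the published 8.3, so the overall score is evidently not an equal-weight average. One plausible reading, not confirmed by the source, is that the framework weights the higher-scoring security and compliance dimensions more heavily.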

The aggregated risk score shows why security testing for AI models isn’t optional—it’s essential for any organization aiming to safeguard operations.

AppSOC helps businesses pursue AI-driven innovation with confidence by delivering comprehensive security and governance for AI models, data, and workflows.

The full report “Testing the DeepSeek-R1 Model: A Pandora’s Box of Security Risks” is available at: https://www.appsoc.com/blog/testing-the-deepseek-r1-model-a-pandoras-box-of-security-risks.